Intro: When is My Cyber Security Job in Danger?

If you’d asked me this a couple of years ago, I would have said that it’s in danger if you are not providing value to your company, your tasks can be performed more cheaply by a technology or another person, and you have no transferable skills that would make you an asset to another part of the business.

This perception changed when the team I was on was made redundant in October of 2023.

The technology industry goes through waves of good and bad times depending a lot on finance and interest rates, but something that you can’t always predict is digital disruption and what decisions this will cause a company to make to increase their bottom line. The redundancy wasn’t in any way, shape, or form related to my technical skill or fit for the company. Having helped grow the business from shortly after they IPO’d to them becoming one of the largest Cyber Security vendors by Annual Recurring Revenue (ARR) on the planet, I knew this wasn’t ever about individual contributions, but rather was a numbers game. The perception was that technology could do the work for less, so long as some outputs of the team were absorbed by other parts of the business, and that this would save the company money and be the best course of action.

This was despite the technical skill and knowledge of those on the team likely being valuable to other parts of the business.

There is no doubt that this became just the tip of the iceberg for a number of other layoffs which were due to occur within the next 1-2 years. For those who were impacted by recent layoffs, please know that it’s not reflective of you, your output, your worth, or the impact you have had at the company.

Layoffs Serve the Shareholders

Roger Lee has done a great job in documenting the most notable tech layoffs over the years, including more than 127 tech companies throughout 2025 so far which have laid off more than 59,000 employees in total.

Most of these layoffs aren’t the result of a non-performing company or employee. In fact, some of the companies had never had a higher Annual Recurring Revenue or market cap than at the time of laying off employees. As these businesses grow, so does the demand for higher growth every year, which becomes exponentially more challenging, and that makes it harder to earn more money for the shareholders.

The reality is that layoffs happen all the time in the tech industry, but Generative AI (GenAI), Large Language Models (LLMs), Retrieval Augmented Generation (RAG), and Model Context Protocol (MCP) innovations amongst others are all going to increase the rate of roles changing or being made redundant. From here on out the combination of these and emerging technologies will simply be referred to as AI.

AI Could Never Take My Job

It doesn’t matter who I talk to or what industry they’re in, there’s some level of resistance to AI, with a belief that it will never be able to do an aspect of someone’s role as well as a skilled human. Despite this, it seems that every industry acknowledges some level of practical use for AI in streamlining or augmenting part of the work which would have traditionally been performed by a person.

Some examples that touch on this concept are below. I challenge you to have a think about what other statements like these you’ve heard:

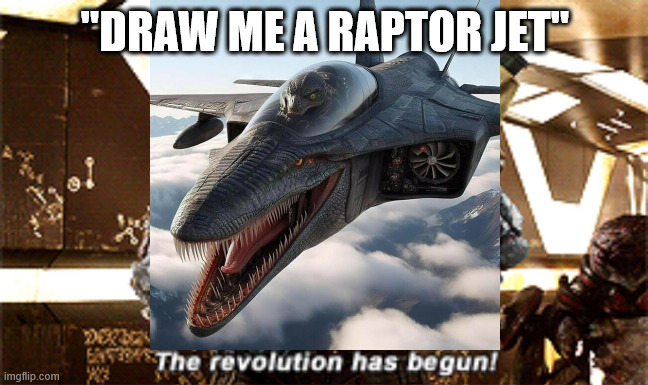

- “AI can generate art because it was trained on copyrighted material, but it will never have that creative soul that makes something truly a piece of art”

- “AI may be able to do some basic programming, but it will never be able to refactor code properly or make code without vulnerabilities”

- “AI hallucinates so will never truly be able to do tasks like mathematics or solving problems it’s never encountered before”

- “AI may be able to analyse a situation sometimes, but other times it makes things up and is just completely wrong”

- “AI got X wrong, so it will never be suitable to perform Y task”

This presents an interesting scenario: AI is seen as helpful in undertaking many tasks, but is not yet at a level of taking your job.

The catch here is that AI doesn’t need to perform everything that a human does. It will never truly take your job, and it doesn’t need to. It just needs to be good enough to put your role in a company in danger of being drastically changed, made obsolete, or judged not worth the amount your employer is paying you.

What is Good Enough AI?

In a previous post I spoke about HISAC - High Impact Security Analysis and Communication, and a part of this was the concept of Fast, Good, and Cheap - Pick Any 2. Without delving into this concept again, it’s worthwhile thinking about how this applies to the work you do on a daily basis and asking yourself some questions:

- “Can this task be done faster using some specially crafted prompts to an AI agent?”

- “Will the output be good enough to build upon or use as is to achieve the desired outcome?”

- “How much will this cost me now and in the long run?”

It may become increasingly clear that so long as you have enough foundational knowledge on a topic, you can often get a Fast, Good, and Cheap outcome at a fraction of the time and cost it otherwise would have taken. Sure, you may need to do some refining around the edges and validate the output to ensure it is factual, but much like the Internet became a general tool that supports every industry in some way, shape, or form, AI is going to play a similar role in the future (Here’s an interesting read on general-purpose technology and the impact it has on our society, both good and bad).

Even now this blog post is likely being scraped, indexed, and used to train a large language model that will be used to displace or change jobs in the future.

The gist is that those who can correctly work with the technology will be far better off than those who can’t, and this is going to have some unintended consequences as it relates to entry level jobs and certifications.

Entry Level Cyber Security Jobs and Certifications

In the past decade working in Cyber Security I’ve noticed some clear work-related changes. Some of these changes have been exacerbated by AI:

1 - Entry level knowledge now is different to entry level knowledge back then.

If we look at entry level roles a decade ago, it was a time when the term mega breaches was beginning to be popularised. Companies began having large scale data breaches that led to hundreds of millions, and even billions, of records being leaked, and Mimikatz had just a couple of years prior entered the public hacking scene, making it easier than ever to steal passwords. CryptoLocker, one of the earliest ransomware variants to use cryptocurrency for payments, had been on the scene for a few years, and this had just been superseded by CryptoWall.

All of this made for a developing industry that, although it had been evolving over some time, now increasingly required dedicated security resources to help make risk-based decisions in an organisation. Back then if you wanted to become a Security Analyst, you could learn the fundamentals of networks and operating systems, work as a systems or application administrator, and hopefully, without too much effort and if you were in the right place at the right time, transition into a risk management or technical role.

Nowadays having foundational knowledge often isn’t enough to secure your first role. A number of questions or tasks that may previously have been completed by an entry level analyst are now also being done more cheaply with the support of AI.

2 - Entry level roles are becoming increasingly scarce.

Much like the above, most advertised roles require some level of prior professional experience. This makes for a chicken and egg scenario where you need experience to get experience. Trust is increasingly valued in this industry, and with AI making it easier than ever for unskilled individuals to come across as somewhat knowledgeable in a domain, knowing who you can trust is often a deciding factor when employing someone new (and this is exacerbated by the current North Korean IT worker schemes).

3 - Certifications are becoming increasingly devalued.

The profitability of industry certifications has attracted new providers, and combined with a lack of regulation, people cheating on certifications, and the greed of big-name certification providers charging increasing amounts for their material, this has paved the way for certifications themselves becoming less valued in the industry. Relevant industry experience now often plays a larger part in whether a candidate is successful or not.

4 - Industry professionals are using AI as a shortcut, but they’re creating incorrect slop as a result of their inattention to detail.

If we look at some popular posts on LinkedIn, many of these are completely created using AI, and some even contain AI generated infographics which are downright wrong, sloppy, and harmful to the industry as a whole. This lack of care in quality raises the question: if you don’t have the technical knowledge or care to review your AI generated material, what are you actually providing to a company besides sloppy AI prompts?

The Industrial Revolutions

Ever since the first Industrial Revolution in the 1700s and 1800s, we’ve progressed all the way to a fourth industrial revolution, which arrived almost a decade ago at the time of writing. This fourth revolution is all about intertwining technology with our everyday lives, and has also been designated the Imagination Age, where analysis and logical thinking are left to machines, whereas creativity, care, imagination, social and emotional intelligence, and all those things which make us human are said to be the jobs of human workers.

Even with advancements in AI leading to digital content such as pictures and videos being crafted out of thin air using just the right technology and prompt, there are some things that, even with current AI technologies, require human input to succeed where machines will not.

- Creativity: This is something that technical certifications cannot teach you. It’s what gives your content a “feel” or “heart” by creating something different to everyone else in your style.

- Care: No matter what AI creates, it’s bound to hallucinate at some point and get parts wrong. Without taking care in what it produces and verifying whether anything is wrong, you will always risk sacrificing quality for quantity, and most people will notice. If you don’t care for your community or output you will always produce a subpar result.

- Imagination: One could argue that this is done with AI based on a given prompt, but it’s those who think about “what if” and “what could be” that will continue to have impact on an organisation’s output.

- Social and Emotional Intelligence: Companies making people redundant without a second thought just to make billions of dollars more in profit shows an evident lack of emotional and social intelligence. At the end of the day people may not remember what you’ve done, but they will remember how you made them feel, and many companies have now forever gone into the “bad books” of the hearts and minds of people around the world.

If everyone were to stop generating new content, being creative, taking care in their output, and sharing with the community, then it’s interesting to think what would happen to Generative AI and Large Language Models in the future, as what humans create is ultimately what brings AI technologies to life.

If you are a good person with empathy, adapt to technological changes, and put care, imagination, and creativity into your work, then you will be well placed as we head into the future of Cyber Security jobs. This still doesn’t guarantee you will be safe. As with any tech job it’s unlikely that you will get let go tomorrow; however, external factors can definitely increase the chances of this happening without you realising it. At the end of the day, job security is a myth.

The Future and Company Short Sightedness

Although it may seem like the jobs we have now will be drastically reduced, the World Economic Forum’s Future of Jobs Report 2025 expects 92 million jobs to be displaced, but a further 170 million jobs to be created.

I want to stress that nothing is going to change overnight, and this will all still take time as businesses are generally slow at adopting new technologies and ensuring the right use-cases are there for them.

An interesting perspective on what the office may look like in another 5 years comes from Adam Tinworth and Martin Recke of NextConf. Specifically, the following quote gives tech leaders much to think about when considering their next round of layoffs in the hope of lining shareholder pockets.

If the number of jobs does grow, and most of those jobs are dependent on new skills, that’s going to be a recruitment and a retention challenge. And if, then, something happens to impact the availability of labour, then we’ll see a big shift in the power dynamics of the workplace.

Maybe the above statement misses the mark, or maybe it’s cheaper for a company to lay off talent and then bring in and train new talent in the future, but one thing is for sure: you don’t build trust or make friends when you throw someone to the wolves without any consideration for their worth as not only an employee, but also a person.